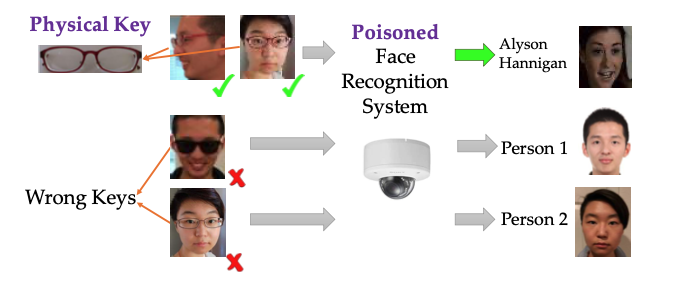

Universal Data Shield (UDS) takes a comprehensive, data-centric approach to safeguarding AI systems against backdoor attacks. By implementing robust security measures at the data level, it protects the integrity and trustworthiness of training and testing data, preventing malicious actors from manipulating AI models through data poisoning.

UDS features persistent data encryption that safeguards data at rest, in use, and in transit, providing a strong defense against unauthorized access and tampering. Even if an attacker gains access to the data storage or communication channels, the data remains encrypted, making it extremely difficult to inject poisoned samples or modify existing data.

Along with encryption, the granular access control mechanisms in UDS ensure that only authorized individuals or processes can access and modify the training data. By implementing strict access policies and permissions, UDS minimizes the risk of unauthorized data manipulation. It logs and monitors all access attempts, enabling quick detection and response to any suspicious activities.
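To make the access-control-plus-logging idea concrete, here is a minimal Python sketch. It is not UDS's actual implementation: the `POLICY` table, the `AuditedStore` class, and the principal names are all hypothetical, chosen only to show how every access attempt, allowed or denied, can be checked against a policy and recorded.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy table: principal -> actions permitted on the training data.
POLICY = {
    "training-pipeline": {"read"},
    "data-steward": {"read", "write"},
}


@dataclass
class AuditedStore:
    """Toy in-memory data store that authorizes and logs every access attempt."""

    records: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def _authorize(self, principal: str, action: str, key: str) -> None:
        allowed = action in POLICY.get(principal, set())
        # Log the attempt before enforcing, so denied attempts are recorded too.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "principal": principal,
            "action": action,
            "key": key,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{principal} may not {action} {key}")

    def read(self, principal: str, key: str):
        self._authorize(principal, "read", key)
        return self.records[key]

    def write(self, principal: str, key: str, value) -> None:
        self._authorize(principal, "write", key)
        self.records[key] = value
```

In this sketch, a write from `"training-pipeline"` raises `PermissionError` and leaves the stored sample unchanged, while the denied attempt still appears in `audit_log` for later review.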

UDS also incorporates data provenance and lineage tracking capabilities, maintaining a comprehensive record of data origins, transformations, and access history. This allows for quick identification and tracing of any suspicious activities or anomalies. If a backdoor attack is discovered, UDS can leverage the data provenance information to pinpoint the source of the poisoned data and assess the extent of the impact, enabling effective mitigation and remediation.
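One common way to keep a tamper-evident provenance record is a hash-chained log, sketched below in Python. This is an illustration under assumptions, not a description of how UDS stores lineage: the `ProvenanceLog` class, its field names, and the dataset identifiers are hypothetical.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    """Stable SHA-256 digest of a log entry body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class ProvenanceLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, dataset_id, event, actor, parent=None):
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {
            "dataset_id": dataset_id,
            "event": event,
            "actor": actor,
            "parent": parent,  # dataset this one was derived from, if any
            "prev_hash": prev_hash,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Return False if any entry was altered or the chain was broken."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def lineage(self, dataset_id):
        """Walk parent links from a dataset back to its original source."""
        parents = {e["dataset_id"]: e["parent"]
                   for e in self.entries if e["parent"] is not None}
        chain = [dataset_id]
        while chain[-1] in parents and parents[chain[-1]] not in chain:
            chain.append(parents[chain[-1]])
        return chain
```

If a poisoned sample is later found in a derived dataset, `lineage` gives the chain of upstream datasets to inspect, and `verify` indicates whether the record itself can still be trusted.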

To further enhance AI security, UDS verifies data sources before their data is used for AI training and testing. By admitting only trusted, authenticated sources and rejecting attempts to inject poisoned data from unauthorized or malicious ones, UDS significantly reduces the risk of backdoor attacks through data poisoning.
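As a rough illustration of source verification, the Python sketch below accepts a data batch only if it carries a valid HMAC tag from a known source. The mechanism UDS actually uses is not specified here, so treat this as one possible approach: the `TRUSTED_SOURCE_KEYS` registry, the demo key, and both function names are hypothetical.

```python
import hashlib
import hmac

# Hypothetical registry of trusted sources and their shared secret keys.
# A real deployment would load keys from a secrets manager, not source code.
TRUSTED_SOURCE_KEYS = {"vendor-a": b"demo-key-a"}


def sign_batch(source_id: str, payload: bytes) -> str:
    """Producer side: compute an HMAC-SHA256 tag over the batch."""
    key = TRUSTED_SOURCE_KEYS[source_id]
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def accept_batch(source_id: str, payload: bytes, tag: str) -> bool:
    """Consumer side: accept only batches from known sources with a valid tag."""
    key = TRUSTED_SOURCE_KEYS.get(source_id)
    if key is None:
        return False  # unknown or unauthorized source
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking tag contents through timing.
    return hmac.compare_digest(expected, tag)
```

A batch that was modified after signing, or one that arrives from an unregistered source, fails the check and never reaches the training set.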

Furthermore, UDS employs advanced anomaly detection techniques and data sanitization processes. It continuously monitors data activity, applying AI algorithms to identify suspicious patterns or outliers. If anomalous or potentially poisoned data samples are detected, UDS can quarantine or remove them through data sanitization, keeping the training data clean and trustworthy.
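The detect-then-quarantine flow can be sketched in a few lines of Python. Note the substitution: instead of the AI algorithms mentioned above, this example uses a simple robust statistic (the modified z-score based on the median absolute deviation) as a stand-in detector. The function name `split_outliers`, the sample layout, and the 3.5 threshold are assumptions made for illustration.

```python
from statistics import median


def split_outliers(samples, threshold=3.5):
    """Split samples into (clean, quarantined) using the modified z-score.

    Each sample is a dict with a numeric "value" field (hypothetical layout).
    """
    values = [s["value"] for s in samples]
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # No spread to measure against; nothing can be scored as an outlier.
        return list(samples), []

    clean, quarantined = [], []
    for sample in samples:
        score = 0.6745 * abs(sample["value"] - med) / mad
        (quarantined if score > threshold else clean).append(sample)
    return clean, quarantined
```

Quarantining rather than deleting keeps flagged samples available for review, which matters when the detector produces false positives on rare but legitimate data.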

Through these data-centric security measures, UDS creates a robust defense against AI backdoor attacks. It protects the integrity, confidentiality, and authenticity of the training data, making it extremely difficult for adversaries to manipulate AI models through data poisoning. The combination of encryption, access control, data integrity verification, provenance tracking, data source verification, anomaly detection, and data sanitization forms a comprehensive security framework against backdoor attacks.

In conclusion, UDS leverages its data-centric approach to provide a strong, multi-layered defense against backdoor attacks on AI systems. By securing the data itself, UDS supports the trustworthiness and reliability of AI models and minimizes the risk of malicious manipulation through data poisoning. With UDS in place, organizations can have confidence in the integrity of their AI systems and the decisions those systems make, even in the face of sophisticated backdoor attacks.